The story behind Taiwan’s ChatGPT

Source: Chien-Tong Wang

ChatGPT has taken off, unleashing a fierce battle among different countries’ language models, including Ernie in China and Bloom in France. A Taiwanese version of ChatGPT is taking shape. Here’s a look at who’s behind it and its potential.

By Elaine Huang (web only)

At the end of February, fabless IC giant MediaTek declared a major artificial intelligence (AI) milestone.

“We can finally put out a traditional Chinese large language model. Right now, we’ve reached the 1 billion parameter level,” wrote Shiu Da-shan (許大山), the managing director of MediaTek’s forward-thinking unit, MediaTek Research Lab, in a Facebook post.

“Even though the 100 billion level is still pretty far away, [the model] can already generate a stable output of meaningful nonsense.”

ChatGPT has triggered a large language model (LLM) boom, with Google and Meta publicly releasing their own models. Many in the industry expect a few American tech companies to dominate a multitude of future tech applications, just as Amazon’s AWS, Microsoft Azure and Google Cloud dominate cloud services, but they believe large language model players will wield far greater influence than the cloud providers do.

Seemingly overnight, this trend has sparked a global arms race to dominate large language models. China has developed WuDao and Baidu’s “Ernie” (Enhanced Representation through kNowledge IntEgration), France has Bloom, and South Korea has HyperCLOVA.

Not wanting to be left behind, Taiwan has released the world’s first free-to-use AI language model built on traditional Chinese characters. Since it was opened to AI developers in February, it has been downloaded more than 3,400 times.

Dubbed the “Taiwanese GPT,” this traditional Chinese language model was built on the open-source Bloom language model by MediaTek Research, led by Shiu, in collaboration with Academia Sinica’s CKIP Lab and the National Academy for Educational Research (NAER).

MediaTek: All in on generative AI

A large language model is a deep learning algorithm with many “parameters,” often numbering in the hundreds of billions, that is trained on huge amounts of text in a specific language so that it can perform a wide range of tasks.
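To give a feel for what these parameter counts mean in practice, here is a rough back-of-the-envelope sketch of the memory needed just to hold a model's weights. It assumes each parameter is stored as a 16-bit number (2 bytes), which is a common convention but not a figure from this article, and the function name is purely illustrative.

```python
# Rough size of a model's weights in memory.
# ASSUMPTION: 2 bytes per parameter (16-bit floats); not a figure from the article.
BYTES_PER_PARAM = 2


def weight_memory_gb(num_params: int, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Return the size of the weights in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Parameter counts mentioned in the article.
models = {
    "1 billion parameters": 1_000_000_000,
    "100 billion parameters": 100_000_000_000,
    "176 billion parameters": 176_000_000_000,
}

for name, count in models.items():
    print(f"{name}: about {weight_memory_gb(count):,.0f} GB of weights")
```

Under that assumption, a 1-billion-parameter model needs about 2 GB for its weights, while a 176-billion-parameter model needs about 352 GB before any training overhead is counted, which helps explain why computing power looms so large in this story.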

Most LLMs in the past have been focused on English, and the Chinese systems have been geared to simplified characters used in the People’s Republic of China (PRC). LLMs based on the traditional Chinese characters used in Taiwan have been few and far between.

Moreover, because OpenAI’s GPT (generative pre-trained transformer) language model is not open source, it can only be accessed through an API, which means users have no control over the GPT models themselves and cannot use them to further their own research.

“The initiative is not with us users of traditional Chinese characters. We feel kind of powerless,” said Shiu, who headed the development of signal processing algorithms for cellular networks at Qualcomm during the 3G era and taught electrical engineering at National Taiwan University before joining MediaTek in 2011.

Shiu Da-shan (Source: Chien-Tong Wang)

Under his leadership, MediaTek Research has focused primarily on AI research, first setting up shop at Cambridge University in England to recruit local talent. A major AI hub, Cambridge is home to the Samsung AI Center and to the United Kingdom’s most powerful supercomputer, launched by Nvidia.

When OpenAI introduced GPT-3 in 2020, it was a game changer for Shiu. GPT-3’s deep learning neural network, which has 175 billion machine learning parameters, was a huge step up from previously trained language models of 10 billion parameters, convincing Shiu he needed to immerse the company in generative AI. He began shifting more manpower into research in this field.

“AI’s contribution to productivity was at a completely different level. It was a huge jump,” Shiu said. At the time, half of MediaTek Research Lab’s more than 20 researchers were moved into generative AI.

In May 2022, MediaTek Research connected with Academia Sinica’s CKIP Lab to develop a traditional Chinese large language model.

NAER: Feeding the beast

In fact, in 2019, the CKIP Lab used Google’s BERT language model and OpenAI’s GPT-2 to build a traditional Chinese model, but was limited by the lack of information that could be fed to the model to learn from, in stark contrast with mainstream LLMs.

To address the problem, the CKIP Lab brought in the NAER, which had accumulated massive amounts of traditional Chinese publications and could provide high-quality texts for machine learning. The team then pulled information from news websites, internet platforms and thesis databases, and fed it into the open-source Bloom LLM to train and optimize its model.

These abundant resources increased the original model’s training data 1,000-fold. Shiu’s team then released the model for download, for use in ChatGPT-style applications such as Q&A, text editing, and text generation.

The innovation center of MediaTek. (Source: Chien-Tong Wang)

Trained so far in MediaTek’s own data center, the traditional Chinese language model remains small, but continuing to train and expand it will require more computing power.

“We can move forward with our base model and more computing power,” Shiu said.

TWS: Taiwan’s computing powerhouse

Taiwan’s most powerful computing resource is the National Center for High-Performance Computing’s “Taiwania 2” supercomputer. Shiu contacted the center to discuss a partnership.

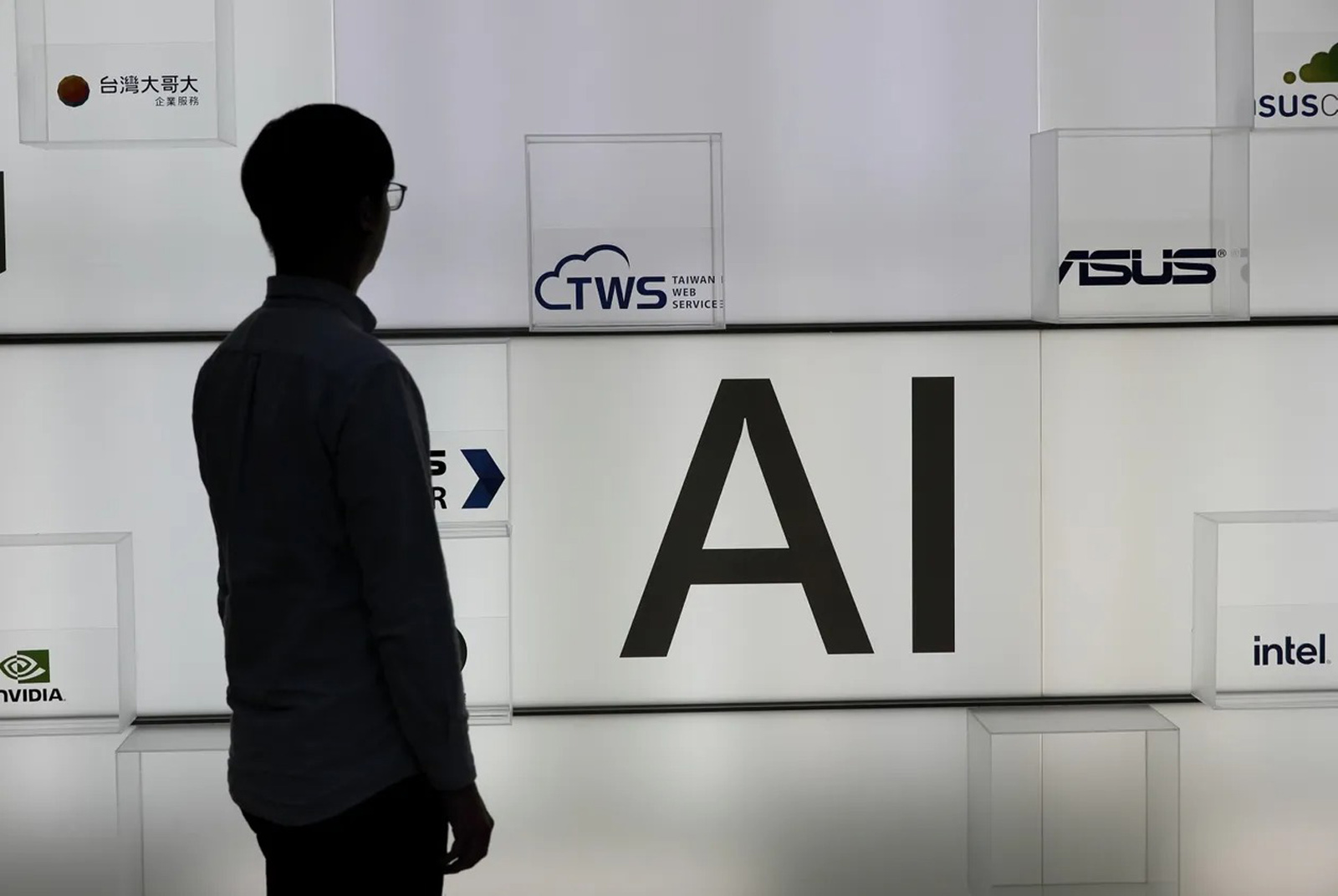

“If Taiwan hadn’t planted these seeds in 2017, it would have had a very hard time keeping up with the generative AI wave led by ChatGPT,” said Peter Wu (吳漢章), the general manager of Taiwan Web Service, which is responsible for running and maintaining Taiwania 2.

At the end of February, the National Center for High-Performance Computing openly ran Bloom on Taiwania 2, demonstrating that it had the ability to run a GPT-3-level large language model with 176 billion parameters, boosting its reputation.

“The AI war is being fought over a system’s computing power. If you have computing power, you have the chance to extend it to the industrial supply chain,” Wu said.

Located in Hsinchu, Taiwania 2 is the product of a four-year, NT$5 billion National Science and Technology Council project launched in 2017 and was forged by Quanta Computer, Asustek Computer and Taiwan Mobile.

In a 2018 ranking of the world’s 500 fastest supercomputers, Taiwania 2’s computing speed of 9 quadrillion floating-point operations per second (9 petaFLOPS) placed it among the top 20.

When the development plan ended in 2022, Taiwan Web Service (TWS), a part of the Asus ecosphere, took over responsibility for the supercomputer. Wu, who had headed Asus Cloud, took over as TWS’ general manager.

Peter Wu (Source: Chien-Tong Wang)

An internal team working on natural language processing (NLP) related to Asus’ developmental service robot, called Zenbo, took over responsibility for TWS, in part because of its familiarity with language models.

TWS originally anticipated developing its business in three fields – genetic analysis, precision medicine and digital twins – but the emergence of ChatGPT and the huge demand it triggered for computing power for large language models changed its plans.

It established a new business model, positioning itself as a “computing power provider,” much as TSMC offers chip-making capacity so that IC design companies can focus on chip design.

Some in tech circles have voiced skepticism over the plan. An executive at a major server vendor told CommonWealth bluntly that no matter how much money Taiwan’s public and private sectors inject into the field, they could never match the scale of funding that Google or Meta can devote to nurturing a large language model and supercomputer.

Aware of but not daunted by the skepticism, Wu Tsung-tsong (吳政忠), a minister without portfolio in Taiwan’s government responsible for technology issues, said he was recruiting experts to help generate a traditional Chinese model by the end of the year.

The reason for that, he said, was his perception of a growing bifurcation between democratic and totalitarian societies. He voiced concern that the huge amount of simplified Chinese information being fed to ChatGPT and China’s development of the “WuDao” and “Ernie” simplified Chinese large language models could lead to a bias toward narratives catering to the People’s Republic of China.

Supercomputer commercial opportunities

Yet model training requires massive computing resources not available to the average enterprise.

“It requires a large company to lead the way and build a large-scale ecosphere to develop foundational models,” said Cheng Wen-huang, a professor in National Taiwan University’s Department of Computer Science & Information Engineering, at a forum on artificial intelligence in March.

TWS’ Peter Wu said Taiwania 2 could help support such an initiative while also exporting Taiwan’s computing services.

One of Asia’s three commercially usable supercomputers is in Taiwan. (Source: TWS)

Of the world’s top 100 supercomputers, no more than 25 are capable of commercial operations. Of those, seven belong to oil companies, three are owned by AI hardware and software specialist Nvidia, four are used by Microsoft to serve OpenAI, and five belong to Russian internet company Yandex.

“Would you dare use Russian supercomputers? We live in two different worlds, and there’s no way we would use them for military or national defense purposes,” Wu said.

There are two other commercial supercomputers owned by South Korean entities Samsung and SK Telecom. “Looking at Asia, there are only three supercomputers that can be used commercially. Two are in South Korea, one is in Taiwan,” Wu said.

Just as TSMC’s clients are not limited to Taiwan IC design companies, TWS computing services can look to overseas markets. One overseas entity has recently sought out TWS, hoping to use Taiwania 2 for gene sequencing.

As Wu said, TWS is planning to expand its computing power so that “Taiwan’s computing power can be exported overseas in the future.”

Whether developing LLMs or selling computing capacity, Taiwan appears intent on emerging as a player in these AI fields.

Translated by Luke Sabatier

Uploaded by Ian Huang